Equitable AI Cookbook

Equitable AI involves creating and using artificial intelligence systems in a fair and just manner, ensuring they do not reinforce existing biases or discriminate against certain groups. It focuses on fairness, transparency, accountability, inclusivity, and ethical considerations to prevent unfair outcomes and promote equal opportunities for all individuals and communities.

Note

This cookbook is a work in progress. You can contribute by opening issues and pull requests on GitHub.

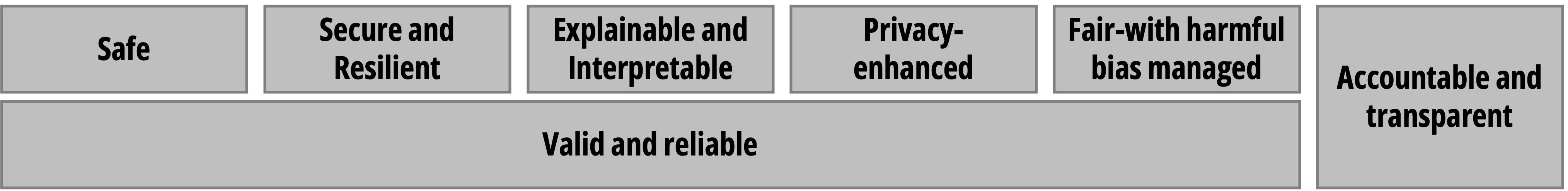

In this cookbook, equitable AI is an umbrella term covering responsible[1], trustworthy[2], and inclusive AI[3] development. Each of these in turn requires other characteristics such as security, privacy, and fairness. For example, the NIST AI Risk Management Framework (AI RMF)[2] describes trustworthy AI through seven characteristics: safe; secure and resilient; explainable and interpretable; privacy-enhanced; fair with harmful bias managed; valid and reliable; and accountable and transparent. Each characteristic must be evaluated carefully in the context of a specific use case. In this sense, equitable AI combines several established research areas.

This cookbook collects best practices from the literature and shares hands-on experiments that help practitioners take confident steps toward trustworthiness in the era of large foundation models. “Best practices” broadly refers to techniques, methodologies, processes, or guidelines that are accepted as superior or most effective in a particular field or context. When the desired outcome is equal access, outcomes, participation, and benefits together with trustworthiness, defining best practices becomes challenging, because these are complex sociotechnical concepts.

As an initial effort toward this goal, this repository contains an extensive list of techniques and experiments in the “digital economy” domain, for which we selected the following use cases:

Fair Use of LLMs in Finance

Developing fairer and more equitable AI-powered applications (e.g., credit scoring, financial news sentiment analysis, market prediction).

A Fairness Benchmark for Facial Biometrics

Improving biometric facial verification using synthetic data generated by foundation models (e.g., diffusion models).
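Both use cases ultimately ask whether a system's outcomes differ across demographic groups. The sketch below shows one simple way to check this, assuming outcomes are available as (group, outcome) pairs: it compares per-group rates, namely loan approval rates for the finance case (a demographic parity check) and false non-match rates (FNMR) of genuine face pairs at a fixed threshold for the biometrics case. The function names `group_rates` and `disparity`, the 0.6 threshold, and the toy data are hypothetical and are not taken from the cookbook's own experiments.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def group_rates(records: Iterable[Tuple[Hashable, bool]]) -> Dict[Hashable, float]:
    """Fraction of records with a True outcome, computed separately per group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def disparity(rates: Dict[Hashable, float]) -> Tuple[float, float]:
    """Return (largest minus smallest rate, smallest / largest rate) across groups."""
    if not rates:
        raise ValueError("need at least one group")
    highest, lowest = max(rates.values()), min(rates.values())
    # If every rate is 0, the groups are treated as identical (ratio 1.0).
    ratio = lowest / highest if highest > 0 else 1.0
    return highest - lowest, ratio


# Hypothetical finance data: (group, loan approved?)
LOANS = [("A", True), ("A", True), ("A", False), ("A", True),
         ("B", True), ("B", False), ("B", False), ("B", False)]

# Hypothetical biometrics data: (group, similarity score of a genuine
# same-person pair). A genuine pair scoring below THRESHOLD is a false non-match.
THRESHOLD = 0.6
GENUINE_SCORES = [("A", 0.90), ("A", 0.70), ("A", 0.55), ("A", 0.80),
                  ("B", 0.50), ("B", 0.65), ("B", 0.40), ("B", 0.90)]

if __name__ == "__main__":
    # Finance: compare approval rates across groups (demographic parity).
    approval = group_rates(LOANS)
    print("approval rates:", approval, "-> (diff, ratio):", disparity(approval))

    # Biometrics: compare false non-match rates across groups at one threshold.
    fnmr = group_rates((group, score < THRESHOLD) for group, score in GENUINE_SCORES)
    print("FNMR:", fnmr, "-> (diff, ratio):", disparity(fnmr))
```

A difference near 0 and a ratio near 1 mean the groups are treated similarly under this single metric. Which metric, threshold, and acceptable gap are appropriate remains a use-case decision; for instance, US employment-selection guidelines flag a selection-rate ratio below 0.8 (the “four-fifths rule”) as evidence of adverse impact.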

In a complex product development ecosystem, “trustworthiness” is hard to achieve, because every sub-module of a product is expected to be trustworthy. The preferable route is therefore a proactive, “by design” approach built into product design from the start. In this cookbook, we explore ways to support such a proactive approach; however, implementing them across a product lifecycle still depends on the technical capacity of development teams.

Contents

Although implementing “best practices” in the equitable AI domain requires a cross-disciplinary effort, we focus on sociotechnical and technical techniques, particularly for fairness, security, and privacy. We do not go into detail on governance, auditing techniques, or comprehensive assurance.

AI Safety

A Proactive Monitoring Pipeline

Data Practices

Fairness

Use Case: Finance

Use Case: Biometrics

References